
Lisa Alazraki

Hi! I am a PhD student in the NLP Group at Imperial College London, advised by Marek Rei.

I am broadly interested in generalisable learning, OOD robustness, and the relationship between reasoning and language.


News

Dec 2025: Going to NeurIPS to present our work on Reinforcement Learning for Reverse Engineering (RLRE) ✈️

Nov 2025: I am giving an invited flash talk at the Breaking Topics in AI Conference on Monday 24th November at I-X Imperial

Sep 2025: Reverse Engineering Human Preferences with Reinforcement Learning is accepted to NeurIPS as a spotlight ✨

Sep 2025: No Need for Explanations: LLMs can implicitly learn from mistakes in-context is accepted to EMNLP as an oral 🎉

Aug 2025: We have released the AgentCoMa benchmark!

Research

AgentCoMa: A Compositional Benchmark Mixing Commonsense and Mathematical Reasoning in Real-World Scenarios

Lisa Alazraki, Lihu Chen, Ana Brassard, Joe Stacey, Hossein A. Rahmani, Marek Rei

arXiv 2025

paper / dataset / code / website

How to Improve the Robustness of Closed-Source Models on NLI

Joe Stacey, Lisa Alazraki, Aran Ubhi, Beyza Ermis, Aaron Mueller, Marek Rei

arXiv 2025

paper / code

Reverse Engineering Human Preferences with Reinforcement Learning

Lisa Alazraki, Tan Yi-Chern, Jon Ander Campos, Maximilian Mozes, Marek Rei, Max Bartolo

NeurIPS 2025 (Spotlight)

paper

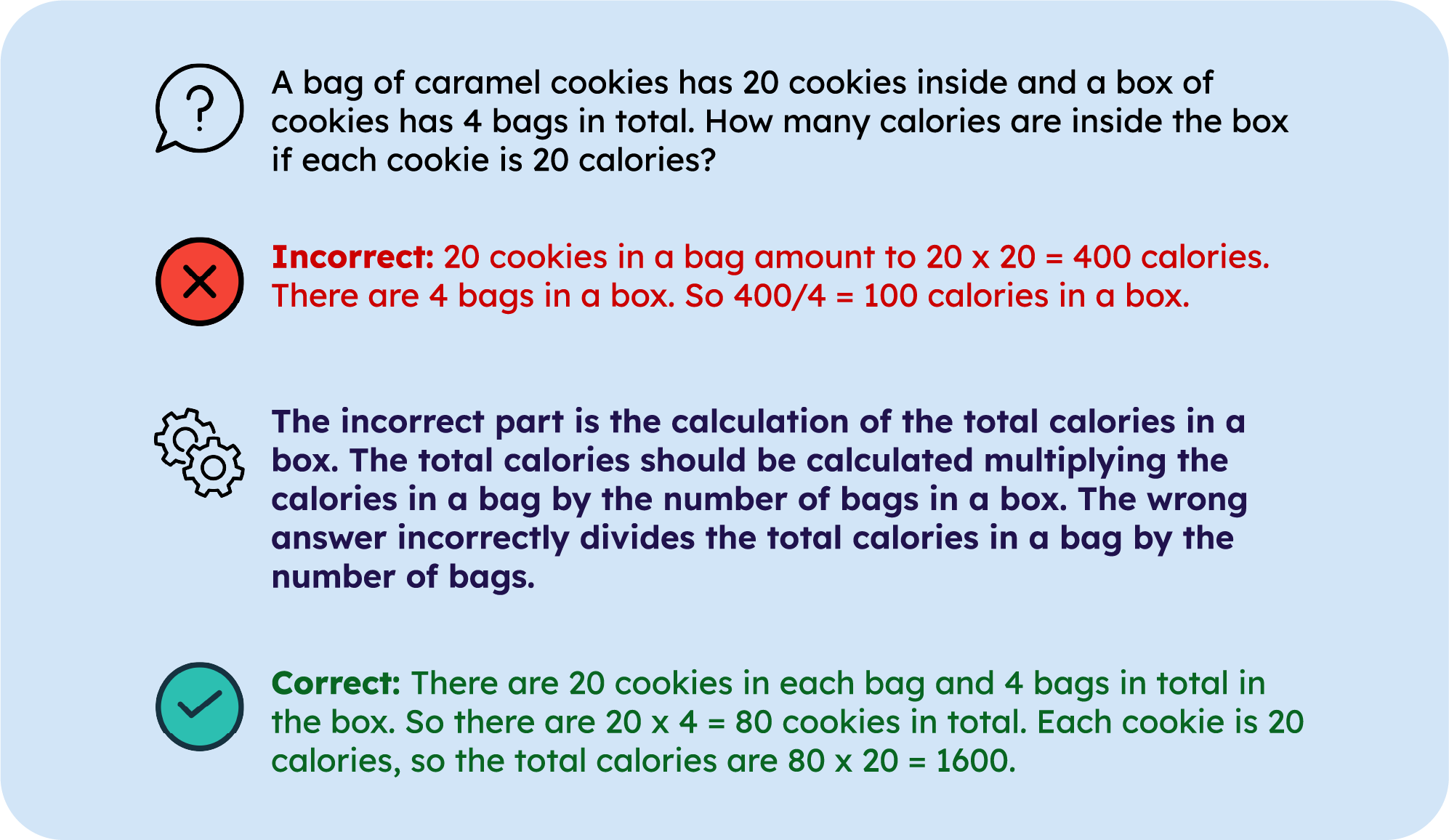

No Need for Explanations: LLMs can implicitly learn from mistakes in-context

Lisa Alazraki, Maximilian Mozes, Jon Ander Campos, Tan Yi-Chern, Marek Rei, Max Bartolo

EMNLP 2025 (Oral)

paper / code

Enhancing LLM Robustness to Perturbed Instructions: An Empirical Study

Aryan Agrawal*, Lisa Alazraki*, Shahin Honarvar, Marek Rei (*Equal contribution)

ICLR 2025 BuildingTrust

paper / code

How Can Representation Dimension Dominate Structurally Pruned LLMs?

Mingxue Xu, Lisa Alazraki, Danilo P. Mandic

ICLR 2025 SLLM

paper

Meta-Reasoning Improves Tool Use in Large Language Models

Lisa Alazraki, Marek Rei

NAACL 2025 Findings

paper / code

How (not) to ensemble LVLMs for VQA

Lisa Alazraki, Lluis Castrejon, Mostafa Dehghani, Fantine Huot, Jasper Uijlings, Thomas Mensink

NeurIPS 2023 ICBINB

paper

Teaching

I am a Teaching Assistant for 70050 Intro to Machine Learning, 70016 Natural Language Processing, 70010 Deep Learning and 40008 Graphs and Algorithms.

Supervision

2025: Kevin Zhou, 'Optimal Data Mixing Strategies at Different Token Budgets for Language Model Training', MSc dissertation, co-supervised with Marek Rei (Imperial College London) and Kris Cao (Cohere).

2024: Aryan Agrawal, 'Improving the Robustness of LLMs to Prompt Perturbations', MSc dissertation, co-supervised with Marek Rei (Imperial College London), Thomas Mensink (Google Research) and Shahin Honarvar (Imperial College London).

2023: Ziheng Zhang, 'Knowledge-Enhanced Supportive Memory for Chatbots', MEng dissertation, co-supervised with the Algorithmic Human Development group (Imperial College London) and Zissis Poulos (University of Toronto).

2022: Alicia Law, 'A Multilingual Chatbot for Self-Attachment Therapy', MSc dissertation (Distinguished Dissertation award), co-supervised with the Algorithmic Human Development group (Imperial College London).

Service

Conference reviewing: ACL 2025, AACL 2025, COLING 2025, COLM 2025, EACL 2025, EMNLP 2025, NAACL 2025


Forked from Jon Barron's website.